=======================================================================

Cybernetics in the 3rd Millennium (C3M) -- Volume 2 Number 1, Jan. 2003

Alan B. Scrivener --- www.well.com/~abs --- mailto:abs@well.com

=======================================================================

Why I Think Wolfram Is Right

( www.amazon.com/exec/obidos/ASIN/1579550088/hip-20 )

(If you missed Part One see the C3M e-Zine archives -- the link is at

the end of this article.)

Psychedelic guru Timothy Leary's last book, "Chaos & Cyber Culture"

(1994), was a collection of his recent short writings.

( www.amazon.com/exec/obidos/ASIN/0914171771/hip-20 )

In one essay he talked about Hermann Hesse, whose "Siddhartha" (1922),

and "Steppenwolf" (1927) were counter-culture favorites in the 1960s:

Poor Hesse, he seems out of place up here in the high-tech,

cybercool, Sharp catalog, M.B.A., upwardly mobile 1990s.

But our patronizing pity for the washed-up Swiss sage may be

premature. In the avant-garde frontiers of the computer culture,

around Massachusetts Avenue in Cambridge, around Palo Alto, in

the Carnegie-Mellon A.I. labs, in the back rooms of the

computer-graphics labs in Southern California, a Hesse comeback

seems to be happening. This revival, however, is not connected

with Hermann's mystical, eastern writings. It's based on his

last, and least-understood work, "Magister Ludi, or The Glass

Bead Game."

( www.amazon.com/exec/obidos/ASIN/022461844X/hip-20 )

This book... is positioned a few centuries in the future, when

human intelligence is enhanced and human culture elevated by

a device for thought-processing called the glass bead game.

Up here in the Electronic Nineties we can appreciate what Hesse

did at the very pinnacle (1931-42) of the smoke-stack mechanical

age. He forecast with astonishing accuracy a certain

postindustrial device for converting thoughts to digital

elements and processing them. No doubt about it, the sage of

the hippies was anticipating an electronic mind-appliance that

would not appear on the consumer market until 1976.

I refer, of course, to that Fruit from the Tree of Knowledge

called the Apple Computer.

Hesse described the glass bead game as "a serial arrangement, an

ordering, grouping, and interfacing of concentrated concepts of

many fields of thought and aesthetics. ...the Game of games had

developed into a kind of universal language through which the

players could express values and set these in relation to one

another."

This sounds to me remarkably like Wolfram's vision of the

significance of Cellular Automata.

I don't think I've made it a secret that I think Wolfram is onto

something big here. In this issue I will address why I think he's

right, and why everybody doesn't get it.

WHY I THINK HE'S RIGHT

In increasing order of significance, I think Wolfram is right because:

1) many of his discoveries and assertions match my own intuitions

2) he's gotten some great results so far using his new methodology

3) his new methodology prescribes much future work (it gives the

graduate students something to do)

4) his new methodology may actually address the problem of

unsolvability which has plagued science since Newton

Intuition

Many of Wolfram's discoveries and assertions match my own intuitions.

For example:

* I've long believed that braids, rope, knots, and weaving are

somehow important to the understanding of cybernetics and

systems theory. (See Wolfram p. 874.)

* It has been clear to me for some time that even simple

substitution systems, such as the early text editor "ed"

and its variants dating back to minicomputers, can yield

complicated results. (See Wolfram p. 82 and p. 889.)

* Similarly, I have long believed that simple programs can

yield complex results, if only by playing with some of the

winners of "Creative Computing" Magazine's "two line basic

program" contest on the Apple II in the early 1980s.

* Because computers allow us to go way beyond our current theories

of mathematics and logic, computational experiments are essential

to further progress.

My mentor, Gregory Bateson, taught me at length that "logic is a

poor model of cause and effect." He elaborated this later in

"Mind and Nature: A Necessary Unity" (1979).

( www.amazon.com/exec/obidos/ASIN/1572734345/hip-20 )

For example, both classical and symbolic (Boolean) logic hold that

it is a "contradiction" and therefore illegal to assert "A equals

Not A." But if you take computer logic chips and wire them up so

that a "Not" gate's output goes back into its input, it doesn't

blow up like on "Star Trek"; it simply oscillates. Furthermore,

this kind of feedback of output to input is essential to creating

the most fundamental of computer circuits, the "flip flop," which

is a one-bit memory. See the description of the "Basic RS NAND

Latch" at:

www.play-hookey.com/digital/rs_nand_latch.html

This follows from the fact that logic has no concept of time.

In the C programming language this problem is resolved by

having two different types of equals:

A == !A

is a test, which always resolves as zero ("false"),

while:

A = !A

is a command, which sets A to its logical opposite. If

its initial value is 1 ("true") it becomes 0 ("false") and

vice-versa. Clearly, if even these simple constructs are

beyond the theories of mathematical logic, but trivial on

a computer, there are many places a computer can take you

which are "off the map" of theory.
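
The point can be made concrete with a small simulation. This is only a sketch, written in Python rather than C, and the helper names (`not_gate_feedback`, `nand`, `latch_settle`) are made up for illustration; they do not come from the latch description cited above. Time is modeled as discrete ticks, which is an assumption that real gates only approximate.

```python
def not_gate_feedback(initial, ticks):
    """Feed a NOT gate's output back to its input, one update per tick."""
    a = initial
    trace = []
    for _ in range(ticks):
        trace.append(a)
        a = 1 - a  # the command A = !A, applied once per tick
    return trace


def nand(a, b):
    """Two-input NAND on 0/1 values."""
    return 0 if (a and b) else 1


def latch_settle(s_bar, r_bar, q, q_bar):
    """Update a cross-coupled NAND pair until its outputs stop changing.

    s_bar and r_bar are the active-low set and reset inputs; q and q_bar
    are the outputs left over from the previous moment in time.
    """
    for _ in range(4):  # a few passes are enough for these input patterns
        new_q = nand(s_bar, q_bar)
        new_q_bar = nand(r_bar, new_q)
        if (new_q, new_q_bar) == (q, q_bar):
            break
        q, q_bar = new_q, new_q_bar
    return q, q_bar


if __name__ == "__main__":
    print(not_gate_feedback(1, 6))      # the "contradiction" just oscillates
    state = latch_settle(0, 1, 0, 1)    # pulse the set input (active low)
    state = latch_settle(1, 1, *state)  # release it: the bit is remembered
    print(state)
```

The latch only makes sense because its outputs at one moment feed its inputs at the next, which is exactly the notion of time that static logic leaves out.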

Before I'd ever owned a computer, back in 1977, I visited my

friend Bruce Webster who had just borrowed $800 to buy a

programmable calculator. On a lark, we investigated what

happened if we took the cosine of the log of the absolute

value of a number, over and over. We found that, given a

starting value of 0.3, the result converged quickly to

zero. What this meant we couldn't say. But we figured that

it was probably the case that no one had done the experiment

before, and we were quite sure there was no robust theory to

explain what we were doing.

What we were messing around with is what is called

"iterated maps," and it was a very young field in 1977.

In 1976 Robert May had published some of the first clues

to the existence of chaos using a similar methodology

to explore the "logistic equation" -- albeit more

systematically than we'd been. See:

astronomy.swin.edu.au/~pbourke/fractals/logistic/
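
For readers who want to try this kind of experiment, here is a minimal sketch of iterating the logistic map x -> r * x * (1 - x). The function name `logistic_orbit` and the three values of r are choices made for illustration, not anything taken from May's paper; the starting value 0.3 simply echoes the calculator experiment above.

```python
def logistic_orbit(r, x0, n):
    """Return n successive values of x -> r * x * (1 - x), starting at x0."""
    orbit = []
    x = x0
    for _ in range(n):
        orbit.append(x)
        x = r * x * (1.0 - x)
    return orbit


if __name__ == "__main__":
    # Print the last few values of a long run for each illustrative r.
    for r in (2.5, 3.2, 4.0):
        tail = logistic_orbit(r, 0.3, 1000)[-4:]
        print(r, [round(x, 4) for x in tail])
```

At r = 2.5 the values settle on a single number, at r = 3.2 they alternate between two numbers, and at r = 4 they keep wandering. One line of arithmetic, repeated, is enough to produce all three behaviors.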

Later, Benoit Mandelbrot used similar computational experiments

(in 2D) to discover the Mandelbrot set; see:

aleph0.clarku.edu/~djoyce/julia/julia.html

And earlier, Edward Lorenz used computational experiments

to find the first "strange attractor" in 3D; see:

astronomy.swin.edu.au/~pbourke/fractals/lorenz/

All of this is chronicled in James Gleick's wonderful book

for the lay reader, "Chaos: Making a New Science" (1987).

www.amazon.com/exec/obidos/ASIN/0140092501/hip-20

Wolfram represents the first attempt I have seen to

systematize the field of computational experiments and

to draw broad conclusions from the effort.

* In 1975 I read an amazing book by Gregory Bateson's daughter

(with Margaret Mead), Mary Catherine Bateson: "Our Own Metaphor;

A Personal Account of a Conference On the Effects of Conscious

Purpose On Human Adaptation" in which she described a conference

organized by her father.

( www.amazon.com/exec/obidos/ASIN/0394474872/hip-20 )

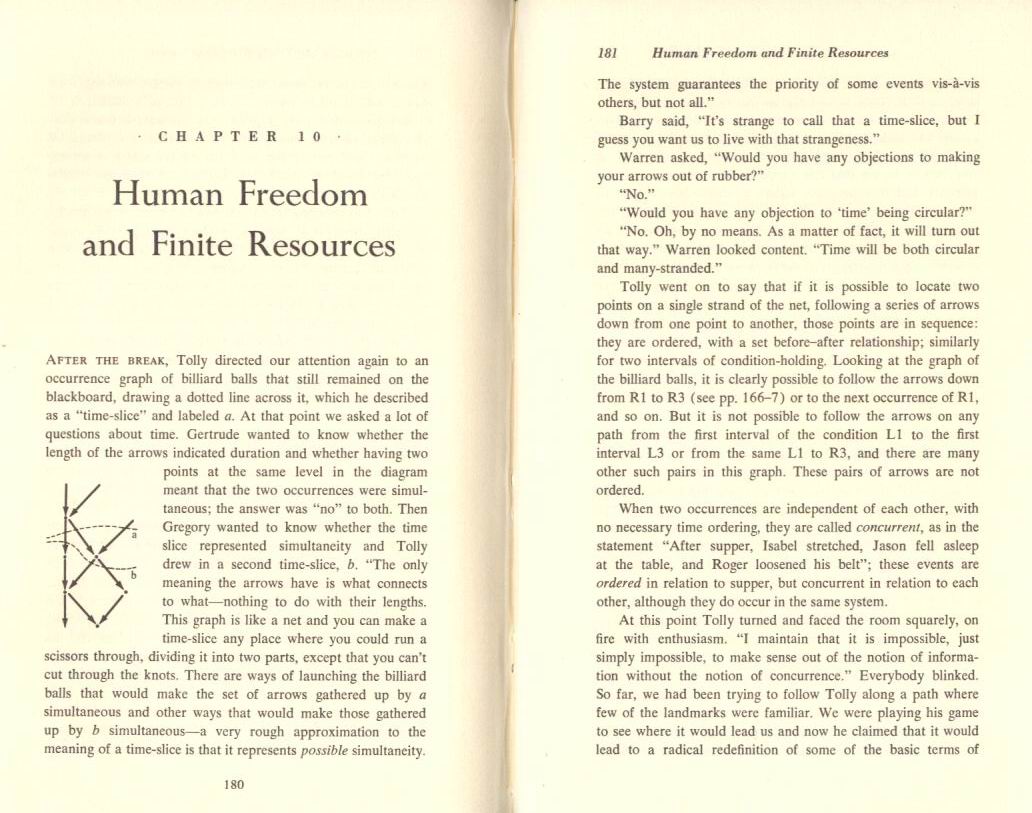

One session had cyberneticist Anatol "Tolly" Holt presenting

a notation for representing systems that change over time which

he called Petri Diagrams. From this notation he derived the

result that simultaneity is only meaningful when parts of a

system are in communication. It made perfect sense to me. See:

www.well.com/~abs/Cyb/4.669211660910299067185320382047/OOM1.jpg

for a portion of that argument. Wolfram asserts the same result

(p. 517) and again I find his intuition agrees with mine.

Bateson used to say quite often that the antidote for scientific

arrogance is lots of data running through your brain from "natural

history," by which he meant the way things are and have been in the

natural world. (He used the story of Job in the Bible as an example

of this. Job's sin was "piety," a kind of religious arrogance, and

God's response -- the voice out of the whirlwind -- was "Knowest thou

the time when the wild goats of the rock bring forth? or canst thou

mark when the hinds do calve?") I say that Wolfram has made it his

business to pump an awful lot of the "natural history" of computation

through his brain, and it has cured him of much of the scientific

arrogance of our time, and sharpened his intuition dramatically.

Results

As if to show that he didn't just spend ten years in a dark room

staring at cellular automata and vegging out, Wolfram produces

some remarkable results. For example, a new, simpler Turing Machine.

In one of the few mostly positive reviews of Wolfram's book,

Ray Kurzweil wrote:

In what is perhaps the most impressive analysis in his book,

Wolfram shows how a Turing Machine with only two states

and five possible colors can be a Universal Turing Machine.

For forty years, we've thought that a Universal Turing

Machine had to be more complex than this. [Wolfram p. 707]

( www.kurzweilai.net/articles/art0464.html?printable=1 )

Wolfram also reveals that the "rule 30" cellular automaton, with a

single black cell as initial condition, produces very high quality

random bits down its center column. He uses well-accepted tests

for randomness to show that this source is far superior to any

other in commercial use, and reveals that this approach has been

the source of random numbers in Mathematica for a long time, with

no complaints. (p. 1084)
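
The construction is simple enough to sketch. What follows is an illustration of the idea only, not Mathematica's actual generator: the function name `rule30_center_column` is made up, and the update is the standard rule 30 formula, in which a cell's new value is its left neighbor XOR (itself OR its right neighbor).

```python
def rule30_center_column(steps):
    """Return the center cell of rule 30 at each of the first `steps` rows,
    starting from a single black cell."""
    width = 2 * steps + 3        # wide enough that the edges never matter
    center = width // 2
    cells = [0] * width
    cells[center] = 1
    bits = []
    for _ in range(steps):
        bits.append(cells[center])
        nxt = [0] * width
        for i in range(1, width - 1):
            # rule 30: left XOR (center OR right)
            nxt[i] = cells[i - 1] ^ (cells[i] | cells[i + 1])
        cells = nxt
    return bits


if __name__ == "__main__":
    print("".join(str(b) for b in rule30_center_column(64)))
```

Nothing here tests the quality of the bits; that takes the statistical batteries Wolfram describes.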

Wolfram also tackles the paradox of the 2nd Law of Thermodynamics.

Newton's equations of motion teach us that all the little

collisions between molecules in a fluid are reversible, so if you

do the classic experiment of removing the barrier in a tank of

half water and half fruit punch, wait for the red and clear fluids

to mix through diffusion, and then reverse the path of every molecule,

it should all go back to the partitioned state. But the 2nd Law says

entropy will always increase, so it CAN'T go back to the partitioned

state. This contradiction is said to have led to Boltzmann's suicide

in 1906. Nobel prize-winning chemist Ilya Prigogine addressed this

quandary in his 1984 book, "Order Out of Chaos: Man's New Dialogue

With Nature."

( www.amazon.com/exec/obidos/ASIN/0553343637/hip-20 )

Wolfram's approach is fundamentally similar, but more concise

(17 pages including diagrams) and, in my opinion, more rigorous

and yet easier to understand. (pp. 441-457)

But what I find to be his most remarkable result is his proof

that Cellular Automaton number 110 is Turing Complete; in other

words, it can be made to do any computation that is possible with

any digital computer (with unlimited time and memory). Like

Conway's "Life" before it, this simple system astonishes us by

matching the complexity of any algorithmic machine.

In addition, just to ground his results in reality, Wolfram shows

how a Cellular Automaton can almost exactly reproduce some of the

patterns on seashells.

Future Work for Grad Students

I've been told that in the "Science Citation Index," which maps all

citations in scientific literature, the most-cited work is the book

"The Structure of Scientific Revolutions" by Thomas S. Kuhn.

( www.amazon.com/exec/obidos/ASIN/0226458075/hip-20 )

Perhaps this is because Kuhn describes how new theories are resisted

by the scientific establishment, and every crackpot with a new idea

likes to point to the resistance he or she faces as evidence of their

genius. But, of course, hidden among the crackpots are the brilliant

new theories of tomorrow.

Kuhn is perhaps most famous for popularizing the phrase "paradigm

shift," and he describes how it usually takes the retirement of the

"old guard" for a new paradigm to be accepted. He also points out

that the real test of a new theory isn't if it is "true." All

scientific theories are eventually proved false; it's only a matter

of time. No, the real test is if it provides new directions of

research for the graduate students. Of course, initially the

graduate students are not allowed to pursue the research. My own

taxonomy of the stages of acceptance of a new scientific theory

goes like this:

- hoots and catcalls

- boos and hisses

- forbidding the grad students to work on the theory

- associate professors sneaking peeks at the work covertly

- long official silence

- cheery admissions that everyone has known for some time

that the theory is right

In my lifetime I have seen these phases in the acceptance of

continental drift theory in geology, chaos theory in applied physics,

Bucky Fuller's geodesic geometry in chemistry, and the law of

increasing returns (the "Fax Effect") in economics. Currently it

seems to be going on with the Atkins Diet in medicine, and in the

commercial world with the adoption of the Linux operating system.

This is where I think Wolfram's contribution is really outstanding.

Some of you may recall the "Grand Challenges" in the late 1980s.

( www.cs.clemson.edu/~steve/Parlib/faq/gccommentary )

From "A Research and Development Strategy for High Performance

Computing," Executive Office of the President, Office of Science

and Technology Policy, November 20, 1987:

A "grand challenge" is a fundamental problem in science or

engineering, with broad applications, whose solution would be

enabled by the application of high performance computing

resources that could become available in the near future.

Examples of grand challenges are:

(1) Computational fluid dynamics for the design of hypersonic

aircraft, efficient automobile bodies, and extremely quiet

submarines, for weather forecasting for short and long

term effects, efficient recovery of oil, and for many

other applications;

(2) Electronic structure calculations for the design of new

materials such as chemical catalysts, immunological

agents, and superconductors;

(3) Plasma dynamics for fusion energy technology and for safe

and efficient military technology;

(4) Calculations to understand the fundamental nature of

matter, including quantum chromodynamics and condensed

matter theory;

(5) Symbolic computations including speech recognition,

computer vision, natural language understanding,

automated reasoning, and tools for design, manufacturing,

and simulation of complex systems.

I don't know about you, but I don't find all of these challenges

that grand. (Though number 5 has promise.) But Wolfram has

definitely thrown down the grandest challenge of all: to map out

the state space of all possible computations.

One thing I admire about Wolfram is that he is very careful to

delineate when he is asserting something is true -- he usually proves

it on the spot -- and when he is making a conjecture based on his

observations and intuition. Two of his conjectures strike me as

providing fertile ground for future research: his classification

of the four types of behaviors of computations, and his Principle

of Computational Equivalence.

Unfortunately, Wolfram doesn't give explicit names to his four classes

of behavior (p. 231). I would call them:

1) constant

2) oscillating

3) mild chaos

4) going ape

A promising research project would be to do a larger search through

the state-space of Cellular Automata (and related systems) with more

varied initial conditions, bigger memories and longer runs, to see

if any other behavioral classes can be identified.
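
A toy version of such a search can be sketched in a few lines. This is an illustration only: the function names (`eca_step`, `find_cycle`, `crude_label`), the ring of 31 cells, the single-black-cell start and the step budget are all arbitrary assumptions, and the three labels it prints are far cruder than Wolfram's four classes. On a finite ring every rule must eventually repeat, so "no repeat within budget" only means the repeat was not seen in the steps allowed.

```python
def eca_step(cells, rule):
    """One update of an elementary cellular automaton on a ring of cells.

    `rule` is the usual 0-255 rule number: bit (4*left + 2*center + right)
    of the number gives the new value of the center cell.
    """
    n = len(cells)
    return tuple(
        (rule >> (4 * cells[i - 1] + 2 * cells[i] + cells[(i + 1) % n])) & 1
        for i in range(n)
    )


def find_cycle(rule, width=31, max_steps=2000):
    """Run from a single black cell; return (transient, period) or None."""
    cells = tuple(1 if i == width // 2 else 0 for i in range(width))
    seen = {}
    for t in range(max_steps):
        if cells in seen:
            return seen[cells], t - seen[cells]
        seen[cells] = t
        cells = eca_step(cells, rule)
    return None


def crude_label(rule):
    """Very rough behavioral label based only on the cycle search."""
    result = find_cycle(rule)
    if result is None:
        return "no repeat within budget"
    _, period = result
    return "fixed" if period == 1 else "periodic"


if __name__ == "__main__":
    for rule in (0, 51, 204, 30, 110):
        print(rule, crude_label(rule), find_cycle(rule))
```

A serious search would vary the initial conditions, widen the ring and lengthen the runs, and would need better measures than "did it repeat yet."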

The Principle of Computational Equivalence asserts that systems fall

basically into two categories: trivial, and Turing-complete. Once

a system is Turing-complete it can be arbitrarily complex, or --

depending on what you believe about the fundamental limits of

human and other natural intelligence -- as complex as anything

will ever be.

This raises two questions: Is there an intermediate level of

complexity beyond the trivial but short of the Turing machine?

Is there a higher level of complexity beyond the Turing machine?

Ultimately the answer to this last question will be found

in determining whether human intelligence occupies another level

beyond the algorithmic. Roger Penrose has tackled this question

(unconvincingly) in "The Emperor's New Mind: Concerning Computers,

Minds, and the Laws of Physics" (1989),

( www.amazon.com/exec/obidos/ASIN/0192861980/hip-20 )

and it remains an open question, at least to me. A good starting

point is: why can a human determine that the Godel String -- which

asserts "this statement cannot be proven" -- is true, even though

it can't be proved (or determined to be true) by a Turing machine?

(Penrose's intuition is that it has to do with quantum gravity, but

he offers little more than hand-waving to support this.)

Get to work, grad students!

Unsolvability

I have found that approximately 100% of non-scientists, and a

majority of scientists, seem unaware of the "dirty little secret"

of quantitative science: that all the really important problems

involve nonlinear Ordinary Differential Equations (ODEs) -- or worse

-- and that in principle these equations are mostly unsolvable.

The way this manifests for most students is that they are taught

Newtonian mechanics -- typically in 9th grade -- but told "we will

ignore friction for now." In the lab, great efforts are made to

keep friction out: dry ice pucks are used, even though we never

encounter them "in the wild." But if you follow a physics curriculum

through graduate school you eventually find that the friction question

remains unsolved in the general case no matter how far you go. Sure,

for the computation of the "terminal velocity" of a falling body you

learn the solution for a perfect sphere, but to this day aerospace

companies like Lockheed-Martin, Northrop-Grumman, TRW and Boeing use

the biggest supercomputers they can afford to compute the terminal

velocities of re-entering spacecraft and missile shapes on a

case-by-case basis. The scandalous thing is that most high school

physics teachers don't know this, and glibly promise students that

they will be taught how to solve problems with friction "later."
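
To see the difference between the textbook case and the brute-force route, here is a small sketch. It assumes the simplest drag law, a retarding force k * v**2 with a constant k, for which the terminal velocity has the closed form sqrt(m * g / k). The function names and the sample values of mass and k are invented for illustration. The same stepping loop would still run if the drag term were replaced by something with no closed-form answer, which is the situation the aerospace companies are in.

```python
import math


def terminal_velocity_closed_form(mass, drag_k, g=9.81):
    """Speed at which quadratic drag k * v**2 balances weight m * g."""
    return math.sqrt(mass * g / drag_k)


def fall_with_drag(mass, drag_k, dt=0.001, t_end=60.0, g=9.81):
    """Step dv/dt = g - (k/m) * v**2 forward from rest with Euler's method."""
    v = 0.0
    for _ in range(int(t_end / dt)):
        v += dt * (g - (drag_k / mass) * v * v)
    return v


if __name__ == "__main__":
    m, k = 1.0, 0.01  # illustrative values only (kg and kg/m)
    print("closed form:", terminal_velocity_closed_form(m, k))
    print("stepped    :", fall_with_drag(m, k))
```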

How did we get here? Let's briefly review the history of quantitative

science. An excellent summary is found in Dr. Alan Garfinkel's

groundbreaking paper, "A Mathematics for Physiology" (1983),

American Journal of Physiology 245: Regulatory, Integrative and

Comparative Physiology 14: R455-66. He describes Newton's success

at inventing the Ordinary Differential Equation form, defining

the two-body gravitational system using eight ODEs, and solving

the equations to prove, for example, that Kepler's three laws of

planetary motion follow from the inverse square law of gravitational

force (Newton's Law of Gravity) and the definition Force equals mass

times acceleration (F=ma). Garfinkel goes on:

...the beauty of Newton's solution to the two-body problem did

not seem to be extendable. In the typical cases, even in

systems only slightly more complex than the two-body problem,

one could write equations based on first principles, but then

it was completely impossible to say what motions would ensue,

because the equations could not be solved. A classic case was

the three-body problem. This is a more realistic model of the

solar system, because it can take into account the nonnegligible

gravitational effects of Jupiter. A great deal of attention

was focused on this problem, because it expressed the stability

of the solar system, a question that had profound metaphysical,

even religious, consequences. Mathematicians attempted to pose

and answer this, some spurred on by a prize offered by King

Oscar of Sweden. Several false proofs were given (and

exposed), but no real progress was made for 150 years. The

situation took a revolutionary turn with the work of Poincare

and Bruns in 1890, which showed that the equations of the

three-body problem have no analytic solution.

The story continues with Poincare inventing topology to answer

qualitative questions (like "is this orbit stable?") about systems

with no analytical solution. (And I always thought topology was

invented to determine whether or not you could morph a coffee cup

into a donut.)

This problem has persisted, and dogged attempts to extend quantitative

science into other realms. In "General System Theory: Foundations,

Development, Applications" (1968) by Ludwig Von Bertalanffy,

( www.amazon.com/exec/obidos/ASIN/0807604534/hip-20 )

A. Szent-Gyorgyi is quoted as relating in 1964:

[When I joined the Institute for Advanced Study in Princeton]

I did this in the hope that by rubbing elbows with those great

atomic physicists and mathematicians I would learn something

about living matters. But as soon as I revealed that in any

living system there are more than two electrons, the physicists

would not speak to me. With all their computers they could

not say what the third electron might do. The remarkable

thing is that it knows exactly what to do. So that little

electron knows something that all the wise men of Princeton

don't, and this can only be something very simple.

Bertalanffy also reproduces this table of the difficulty of

solving equations, which is pretty grim:

www.well.com/~abs/equations.html

In his seminal book "Cybernetics, or Control and Communication in

the Animal and the Machine" (1948),

( www.amazon.com/exec/obidos/ASIN/026273009X/hip-20 )

Norbert Wiener tells the story (in the preface to the second,

1961 edition):

When I came to M.I.T. around 1920, the general mode of putting

the questions concerning non-linear apparatus was to look for a

direct extension of the notion of impedance which would cover

linear as well as non-linear systems. The result was that the

study of non-linear electrical engineering was getting into a

state comparable with that of the last stages of the Ptolemaic

system of astronomy, in which epicycle was piled on epicycle,

correction upon correction, until a vast patchwork structure

ultimately broke down under its own weight.

Interestingly, Wiener also reveals that he made early suggestions

for the construction of a digital computer when analog computers

-- especially integrators, electronic analogs to Lord Kelvin's

disk-globe-and-cylinder -- were failing him. His original

justification for the construction of a general purpose calculating

device was to simulate unsolvable ODEs. (And I always thought the

computer was invented to crack German codes and later to calculate

ballistics tables for big Naval guns.)

Another take on this history is found in a textbook on control

system design, "Linear Control Systems" (1969), by James Melsa

and Donald Schultz. (It is long out of print and I couldn't find a

reference to it on Amazon.com, but a more recent revised edition

with the same title, published in 1992, by Charles E. Rohrs, James

Melsa, and Donald Schultz -- also out of print, alas -- is listed:

www.amazon.com/exec/obidos/ASIN/0070415250/hip-20 )

From the 1969 edition:

In [1750] Meikle invented a device for automatically steering

windmills into the wind, and this was followed in 1788 by Watt's

invention of the flywheel governor for regulation of the steam

engine.

However, these isolated inventions cannot be construed as

reflecting the application of any automatic control THEORY.

There simply was no theory although, at roughly the same time

as Watt was inventing the flywheel governor, both Laplace and

Fourier were developing the two transform methods that are now

so important in electrical engineering and in control in

particular. The final mathematical background was laid by

Cauchy (1789-1857), with his theory of the complex variable...

Although... it was not until about 75 years after his death

that an actual control theory began to evolve. Important

early papers were "Regeneration Theory," by Nyquist, 1932,

and "Theory of Servomechanisms," by Hazen, 1934. World War

II produced an ever-increasing need for working automatic

control systems and this did much to stimulate the development

of a cohesive control theory. Following the war a large number

of linear-control-theory books began to appear, although the

theory was not yet complete. As recently as 1958 the author

of a widely-used control text stated in his preface that

"Feedback control systems are designed by trial and error."

With the advent of new or modern control theory about 1960,

advances have been rapid and of far-reaching consequence.

The basis of much of this modern theory is highly mathematical

in nature and almost completely oriented to the time domain.

A key idea is the use of state-variable-system representation

and feedback, with matrix methods used extensively to shorten

the notation.

So what we see is that while virtually no progress was made on

solving non-linear ODEs, more and more sophisticated analytic

techniques were being developed for the linear cases. This is

great if you have the luxury of choosing which problems you will

solve. (Theoreticians, it seems, have long looked down their noses

at the poor wretches doing APPLIED mathematics, who have to take

their problems as they come.) The danger in this was that a

whole generation of scientists and engineers were building up

intuitions based only on linear systems.

An extremely notable event occurred with the investigation of the

so-called "Fermi-Pasta-Ulam" problem at Los Alamos around 1955.

A copy of their landmark paper can be found at:

www.osti.gov/accomplishments/pdf/A80037041/A80037041.pdf

More recently it has been called the Fermi-Ulam-Pasta problem;

I don't know if this is because -- though Fermi, the "grand old

man" of the group, passed away in 1954 -- Ulam went on to do some

related work for years, including early investigations into iterated

maps and Cellular Automata. Or maybe it's so the problem can be

called "FUP" for short.

After the first electronic computers were built there was a

long-running debate between those who wanted to do things that

could always be done, only now they were faster, and those who

wanted to do things that were previously impossible. The

former group, including the Admirals who wanted those ballistics

tables, usually had the best funding. But finally, probably as a

prize for good work in inventing A- and H-bombs, the folks at Los

Alamos were permitted to use their "MANIAC I" computer to do some

numerical experiments on the theory of non-linear systems, starting

in 1954. Fermi and company wanted to study a variety of non-linear

systems, but for starters they picked a set of masses connected by

springs, like so:

+----+      +----+      +----+      +----+
|    |_/\/\_|    |_/\/\_|    |_/\/\_|    |
|    |      |    |      |    |      |    |
+----+      +----+      +----+      +----+

Here I show 4 masses connected by 3 springs. The FUP group first

modeled 16 masses, and later 64 masses. They were going to displace

a mass vertically and then observe the vibrations of the system.

If the springs were linear, so that the force was exactly

proportional to the spring's displacement:

F = -kx

by some constant factor "k," the system was fully understood.

It had vibrational modes which could be studied analytically

using Fourier and Laplace transforms. The physicists had grown

up with their intuitions shaped by these techniques. They explained:

The corresponding Partial Differential Equation (PDE)

obtained by letting the number of particles become

infinite is the usual wave equation plus non-linear terms

of a complicated nature.

They knew that the linear case had vibratory "modes" which

would each exhibit a well-defined portion of the system's energy,

independent of each other. They also knew that non-linear systems

tended to be "dissipative," in that the energy was expected to

"relax" through the modes, until it was fairly equally shared

between them. This was called "thermalization" or "mixing."

Of course, computer time was horrendously expensive at this point,

and more importantly the paradigm was still analytic, so the goal

was to do just a few experiments, use the results to "tweak" the

linear case somehow to generalize it to non-linear, and continue

as they were accustomed. But when they plugged only slightly

non-linear force equations into their computer, they were astonished

at the results. In fact, they first suspected hardware or software

errors. But ultimately they were forced to report:

Let us say here that the results of our computations show

features which were, from the beginning, surprising to us.

What they saw instead was the energy being passed around by the

first three modes only, dominating one and then another in turn,

until 99% of it ended back in a mode where it had been before;

this pattern repeated nearly periodically for a great number

of iterations. Nothing in their intuition had prepared them for

this.
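
To get a feel for this kind of numerical experiment, here is a rough sketch of such a chain in Python. Everything specific in it is an assumption chosen for illustration rather than taken from the report: unit masses and springs, both ends held fixed, a quadratic correction to the spring force scaled by `alpha`, 16 masses, a starting shape equal to the lowest mode, and a velocity Verlet integrator. The function names are likewise made up.

```python
import math


def accelerations(x, alpha):
    """Forces on unit masses joined by springs, with both ends held fixed."""
    n = len(x)
    acc = []
    for i in range(n):
        left = x[i - 1] if i > 0 else 0.0
        right = x[i + 1] if i < n - 1 else 0.0
        d_right = right - x[i]  # stretch of the spring to the right
        d_left = x[i] - left    # stretch of the spring to the left
        acc.append((d_right - d_left) + alpha * (d_right ** 2 - d_left ** 2))
    return acc


def mode_energies(x, v):
    """Energy in each normal mode of the linear (alpha = 0) chain."""
    n = len(x)
    norm = math.sqrt(2.0 / (n + 1))
    energies = []
    for k in range(1, n + 1):
        shape = [math.sin(math.pi * k * (i + 1) / (n + 1)) for i in range(n)]
        a = norm * sum(s * xi for s, xi in zip(shape, x))
        a_dot = norm * sum(s * vi for s, vi in zip(shape, v))
        omega = 2.0 * math.sin(math.pi * k / (2 * (n + 1)))
        energies.append(0.5 * (a_dot ** 2 + (omega * a) ** 2))
    return energies


def run_chain(alpha, n=16, dt=0.05, steps=4000):
    """Start in the lowest mode and integrate with velocity Verlet."""
    x = [math.sin(math.pi * (i + 1) / (n + 1)) for i in range(n)]
    v = [0.0] * n
    acc = accelerations(x, alpha)
    for _ in range(steps):
        v = [vi + 0.5 * dt * ai for vi, ai in zip(v, acc)]
        x = [xi + dt * vi for xi, vi in zip(x, v)]
        acc = accelerations(x, alpha)
        v = [vi + 0.5 * dt * ai for vi, ai in zip(v, acc)]
    return mode_energies(x, v)


if __name__ == "__main__":
    for alpha in (0.0, 0.25):
        e = run_chain(alpha)
        total = sum(e)
        print(alpha, [round(ek / total, 3) for ek in e[:4]])
```

With `alpha = 0` essentially all the energy stays in the first mode, as linear theory says it must. With the small nonlinear term switched on, energy leaks into other modes; whether and when it finds its way back takes much longer runs to see.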

The title of the paper was:

STUDIES OF NONLINEAR PROBLEMS, I

and the group stated that they had a whole series of numerical

experiments planned:

This report is intended to be the first one of a series

dealing with the behavior of certain nonlinear physical

systems where the nonlinearity is introduced as a perturbation

to a primarily linear problem....

Several problems will be considered in order of increasing

complexity. This paper is devoted to the first one only.

The approach they were taking was intended to enhance mainstream

"Ergodic Theory." The web site:

www2.potsdam.edu/MATH/madorebf/ergodic.htm

offers the best definition I have found: "In simple terms Ergodic

theory is the study of long term averages of dynamical systems."

This is a little odd when you consider that the "average" position

of the Earth in its annual orbit is close to being at the center

of the Sun.

One of the things that the FUP group realized in retrospect was

that they were rediscovering "solitons" which are non-dissipative

non-linear waves. According to:

physics.usc.edu/~vongehr/solitons_html/solitons.html

solitons have this history:

A solitary wave was firstly discussed in 1845 by J. Scott Russell

in the "Report of the British Association for the Advancement of

Science". He observed a solitary wave traveling along a water

channel. The existence and importance was disputed until D.J.

Korteweg and G. de Vries gave a complete account of solutions to

the non-linear hydrodynamical [partial differential] equation in

1895.

Apparently this mathematical basis for solitons was mostly

forgotten until the FUP group found the phenomenon in their

simulations and revived interest. (Solitons also explain tsunamis

or so-called "tidal waves.") The web site:

www.ma.hw.ac.uk/solitons/

has further information about solitons, including the story of how

the canal was recently renamed in honor of Russell, how in 1995

the wave he observed was recreated on the canal, how solitons are

now widely used in fiber optics because they don't dissipate, and

how a fiber optic channel now runs along the path of that very canal.

(Let me say here parenthetically that I just love being right.

I concluded that the FUP experiment was important as a precursor to

Wolfram's work BEFORE reading his Notes, in which he makes the same

observation, on page 879. There is also discussion on Wolfram's

site at:

scienceworld.wolfram.com/physics/Fermi-Pasta-UlamExperiment.html

And a recent book, "The Genesis of Simulation in Dynamics: Pursuing

the Fermi-Pasta-Ulam Problem" by Thomas P. Weissert

www.amazon.com/exec/obidos/ASIN/0387982361/hip-20

delves deeply into the problem from a modern perspective, though

I haven't read it.)

So what exactly does FUP have to do with "A New Kind of Science"

anyway? As Sir Winston Churchill once said, "Men occasionally

stumble over the truth, but most of them pick themselves up and

hurry off as if nothing ever happened." The curious thing is that

there was never a "STUDIES OF NONLINEAR PROBLEMS, II." I really

don't know why. Was their result too "weird" to get any more

funding? Did it scare them off? Did Fermi's death derail them?

Can we credit Ulam with carrying on the work in his own way?

But one thing is clear: their work suggested that a methodical

examination of ODEs and PDEs was in order to bring clarity to the

extremely muddled field of nonlinear analysis. I sort of assumed

that sooner or later this happened. But Wolfram tells us (p. 162):

Considering the amount of mathematical work that has been

done on partial differential equations, one might have thought

that a vast range of different equations would by now have been

studied. But in fact almost all of the work -- at least in one

dimension -- has been concentrated on just ... three specific

equations ... together with a few others that are essentially

equivalent to them.

(For some associated illustrations see:

www.wolframscience.com/preview/nks_pages/?NKS0165.gif )

Well! The lesson of FUP has still not been absorbed!

Let me try and explain this another way. Prior to Darwin

the argument for an omniscient and omnipotent creator went

something like this: If I am able to learn to walk, let's say,

I must have an innate ability-to-learn-to-walk, which must

have been designed in by a creator who knew how to create

such an ability. Alternatively, I might have learned how to

learn how to walk. But then, I must have had an innate

ability-to-learn-how-to-learn-how-to-walk, which must have

been designed in by an even wiser creator who knew how to

design such an ability to learn how to learn. And so on.

Darwin suggested that I might instead just be lucky, or at

least descended from lucky ancestors. And all of my potential

ancestors didn't have to have this luck -- only the survivors.

Experiments in genetic algorithms have already shown that computer

programs can be "bred" to do things they don't intrinsically

"know how to do." One of the reasons this works is that there

are only so many things that a computer program can do in the

first place. If one of those things can solve a problem

presented to the environment of "breeding" genetic algorithms,

one of them is bound to find it sooner or later.
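
The breeding idea can be sketched as a toy genetic algorithm.

Everything here is an assumption made for illustration: the goal (a

string of all 1 bits), the population size, the mutation rate, and

the function names. No individual is told how to reach the goal;

selection just keeps whichever candidates happen to score best.

```python
import random


def fitness(genome):
    """Toy goal (an assumption): count the 1 bits in the genome."""
    return sum(genome)


def crossover(mother, father, rng):
    """Single-point crossover: head of one parent, tail of the other."""
    point = rng.randrange(1, len(mother))
    return mother[:point] + father[point:]


def mutate(genome, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [bit ^ 1 if rng.random() < rate else bit for bit in genome]


def breed(length=32, population=40, generations=200, rate=0.02, seed=1):
    """Return (best genome, best fitness recorded at each generation)."""
    rng = random.Random(seed)
    pool = [[rng.randint(0, 1) for _ in range(length)]
            for _ in range(population)]
    history = []
    for _ in range(generations):
        pool.sort(key=fitness, reverse=True)
        history.append(fitness(pool[0]))
        if history[-1] == length:
            break  # a lucky lineage has hit the goal
        # Only the fitter half survives; offspring replace the rest.
        survivors = pool[: population // 2]
        children = [
            mutate(crossover(rng.choice(survivors),
                             rng.choice(survivors), rng), rate, rng)
            for _ in range(population - len(survivors))
        ]
        pool = survivors + children
    best = max(pool, key=fitness)
    return best, history


best, history = breed()
print("best fitness per generation:", history)
print("best genome:", "".join(map(str, best)))
```

Because the survivors are carried over unchanged, the best score can

never go down from one generation to the next; the only source of

improvement is the luck of crossover and mutation.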

Recall what A. Szent-Gyorgyi said: "So that little electron

knows something that all the wise men of Princeton don't, and

this can only be something very simple." The problems we can't

solve analytically we may be able to solve with luck, especially

if our computers allow us to create billions, trillions, or more

opportunities for the luck to appear.

WHY EVERYBODY DOESN'T GET IT

It has been hard for me to find reviews of Wolfram's book

that praise him. A typical review appeared in "The New York

Review of Books" on October 24, 2002: "Is the Universe a Computer?"

by Steven Weinberg:

I am an unreconstructed believer in the importance of the word,

or its mathematical analogue, the equation. After looking at

hundreds of Wolfram's pictures, I felt like the coal miner in

one of the comic sketches in "Beyond the Fringe," who finds the

conversation down in the mines unsatisfying: "It's always just

'Hallo, 'ere's a lump of coal.'"

(For the whole thing see:

www.nybooks.com/articles/15762

For a thorough list of reviews available on-line, see:

www.math.usf.edu/~eclark/ANKOS_reviews.html )

The most thoughtful review I have found is "A Mathematician

Looks at Wolfram's New Kind of Science," by Lawrence Gray. He

is careful, thorough, and not too unkind, but I think he gets it

wrong.

Why does this book inspire so much opposition? A pat answer would

be that Wolfram is on the wrong side of a paradigm shift, and this is just

par for the course. The two most common objections to a new

breakthrough are "this is crazy" and "there's nothing new here."

Consider that when Einstein published his Theory of Relativity (the

Special variety) the complaint was made (besides that it was crazy)

that he was just collating a bunch of results from others. For

example, it was already known that the Lorentz contractions --

shrinking in the direction of motion near the speed of light --

could be derived from Maxwell's electromagnetics equations, already

half a century old. But by systematizing and integrating a bunch

of material Einstein paved the way for new results, new experiments,

and new theories.

Certainly Wolfram faces the problem that most of his contemporaries

have not worked as he has so extensively with computational

experiments, and so lack his intuition on the subject. I think

I share some of his intuitions because I've been performing my own

experiments for my whole adult life. I've simulated solitaire

games on a mainframe with punch cards, modeled Ross Ashby's

"homeostat" learning machine on a minicomputer time-sharing system,

observed the bizarre behavior of self-modifying code on an early

personal computer, explored the Mandelbrot set on a supercomputer

and the Lorenz attractor on a powerful graphical workstation, and

wandered through problems in number theory on a Windows system.

I've seen some of the same chimeras he has, and I trust his hunches

nearly as much as my own.

But there's something more here in the opposition to Wolfram.

Early in the Notes (p. 849), he says:

Clarity and modesty.

There is a common style of understated scientific writing to

which I was once a devoted subscriber. But at some point

I discovered that more significant results are usually

incomprehensible if presented in this style. For unless one

has a realistic understanding of how important something is,

it is very difficult to place or absorb it. And so in writing

this book I have chosen to explain straightforwardly the

importance I believe my various results have. Perhaps I might

avoid some criticism by a greater display of modesty, but the

result would be a drastic reduction in clarity.

Recall how John Lennon was crucified by the press when he said

of the Beatles, "Now we're more famous than Jesus." Even Jesus

had John the Baptist to declare His divinity instead of proclaiming

it personally. I think Wolfram would have benefited by having some

sort of "shill" to trumpet the profundity of his work, while he stood

to the side and said "aw shucks." But it's too late for that now.

It has been said that coincidence is a researcher's best ally.

I coincidentally happened to be reading the science fiction novel

"Contact" by Carl Sagan while working on this essay.

( www.amazon.com/exec/obidos/ASIN/0671004107/hip-20 )

I found a few things that are relevant. A religious leader is

talking to a scientist about the implications of a message from

the intelligent aliens near the star Vega which has been detected

on earth:

"You scientists are so shy," Rankin was saying. "You love to

hide your light under a bushel basket. You'd never guess what's

in these articles from the titles. Einstein's first work on the

Theory of Relativity was called 'The Electrodynamics of Moving

Bodies.' No E=mc squared up front. No sir. 'The Electrodynamics

of Moving Bodies.' I suppose if God appeared to a whole gaggle

of scientists, maybe at one of those big Association meetings,

they'd write something all about it, and call it, maybe, 'On

Spontaneous Dendritoform Combustion in Air.' They'd have lots

of equations, they'd talk about 'economy of hypothesis'; but

they'd never say a word about God."

Later when the message is decoded, it contains instructions for

building an elaborate machine of unknown purpose:

For the construction of one component, a particularly intricate

set of organic chemical reactions was specified and the resulting

product was introduced into a swimming

pool-sized mixture... The mass grew, differentiated, specialized,

and then just sat there -- exquisitely more complex than anything

humans knew how to build. It had an intricately branched network

of fine hollow tubes, through which perhaps some fluid was to

circulate. It was colloidal, pulpy, dark red. It did not make

copies of itself, but it was sufficiently biological to scare a

great many people. They repeated the procedure and produced

something apparently identical. How the end product could be

significantly more complicated than the instructions that went

into building it was a mystery.

Here Sagan, himself a scientist, puts his finger on two of Wolfram's

problems: he isn't shy enough, and he challenges the widely held

belief that "the end product could [not] be significantly more

complicated than the instructions that went into building it."
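
Wolfram's elementary cellular automata give a compact way to test

that belief. The sketch below assumes the standard rule numbering

(the eight bits of the rule number give the new cell value for each

three-cell neighborhood) and wraps the row around at its edges; the

function names are illustrative. Rule 30 is specified by a single

byte, yet the triangle it prints looks far from simple.

```python
def step(cells, rule):
    """Advance one generation; `rule` is a Wolfram rule number (0-255)."""
    n = len(cells)
    new_cells = []
    for i in range(n):
        # cells[i - 1] wraps to the last cell when i == 0.
        left, center, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        neighborhood = (left << 2) | (center << 1) | right  # value 0..7
        new_cells.append((rule >> neighborhood) & 1)
    return new_cells


def run(rule=30, width=63, steps=30):
    """Return steps + 1 rows, starting from one live cell in the middle."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        rows.append(cells)
    return rows


for row in run():
    print("".join("#" if cell else " " for cell in row))
```

Passing a different rule number to run() is all it takes to sample

another of the 256 elementary rules.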

Recently I interviewed my friend and associate Art Olson at Scripps

Research Institute for a future C3M column. He was very interested

in my reaction to Wolfram. I told him how I thought Wolfram provided

directions for research by grad students. I said I thought

biology -- especially genomics -- could get a lot out of his

approach. Art challenged me, "How would I advise a grad student to

continue this research? What exactly would I tell him to do?"

I suggested that, since Scripps has always seemed to have the

latest and greatest "big iron" in supercomputers, he have a grad

student continue the search for other categories of Cellular

Automata behaviors, as I described above. "And how would this

benefit biology?" he asked.

I've given this question a lot of thought. I came up with

this metaphor. America used to be a nation of farmers connected

by gravel roads. It was not too tough a job to convince farmers

to improve the roads that lead directly from their farms to market.

But the U.S. highway system was much harder to sell. Why would

one rural region want to be connected to another with paved roads,

let alone all the way to Chicago? Maybe you might say, "So you

can order from the Sears Catalog."

The farmer might reply, "But I can already order from the Sears

Catalog."

"Well, the stuff would get here sooner, and shipping would cost less."

"Well, I can wait, and besides I don't really order that much from

the catalog anyway."

"But you would if it were cheaper and faster!"

And so on. Obviously, in retrospect, the U.S. highway system has

been an enormous boon to American farmers. But it is always hard to

pitch the benefits of general infrastructure improvements. What

Wolfram proposes has the potential of great benefits to the general

scientific and mathematical infrastructure, but it will be hard for

a while to find specific champions.

Perhaps this metaphor is a little unfair. Art would certainly agree

that general improvements in -- say -- processor speed would benefit

biology. A better metaphor might be this: a regional park near my

house has a paved path through the oak valley, since that's where

it's easiest to put a trail, and besides, it's a nice, shady place

to walk. Along the path there are signs to identify the native plant

species. If no one had ever been to the mountain top nearby, and

someone were to propose cutting a path through the chaparral that

led up to the peak, critics might say "Why bother? Stick to the valley

because the brush is impenetrable. And besides, there's probably

nothing new up there. Stay down here where we have all the species

labeled." Well, maybe there isn't anything new up there, but there's

only one way to find out.

The late Alfred North Whitehead is said to have once introduced a

lecture by Bertrand Russell on quantum mechanics, and afterwards to

have thanked Russell for his lecture, "and especially for leaving

the vast darkness of the subject unobscured."

I would like to thank Stephen Wolfram for leaving the vast darkness

of his subject unobscured.

At the risk of mixing my metaphors, I say, let's mount a few

expeditions into that darkness.

======================================================================

newsletter archives:

www.well.com/~abs/Cyb/4.669211660910299067185320382047/

======================================================================

Privacy Promise: Your email address will never be sold or given to

others. You will receive only the e-Zine C3M unless you opt-in to

receive occasional commercial offers directly from me, Alan Scrivener,

by sending email to abs@well.com with the subject line "opt in" -- you

can always opt out again with the subject line "opt out" -- by default

you are opted out. To cancel the e-Zine entirely send the subject

line "unsubscribe" to me. I receive a commission on everything you

purchase during your session with Amazon.com after following one of my

links, which helps to support my research.

======================================================================

Copyright 2003 by Alan B. Scrivener

www.well.com/~abs/Cyb/4.669211660910299067185320382047/OOM1.jpg

for a portion of that argument. Wolfram asserts the same result

(p. 517) and again I find his intuition agrees with mine.

Bateson used to say quite often that the antidote for scientific

arrogance is lots of data running through your brain from "natural

history," by which he meant the way things are and have been in the

natural world. (He used the story of Job in the Bible as an example

of this. Job's sin was "piety," a kind of religious arrogance, and

God's response -- the voice out of the whirlwind -- was "Knowest thou

the time when the wild goats of the rock bring forth? or canst thou

mark when the hinds do calve?") I say that Wolfram has made it his

business to pump an awful lot of the "natural history" of computation

through his brain, and it has cured him of much of the scientific

arrogance of our time, and sharpened his intuition dramatically.